4.3 Modelling multiple features

With just one feature, our classification model is not very accurate at predicting whether a user will reply, so we will now extend it to handle multiple features. We can do this by changing the model so that multiple features contribute to the score for an email. To decide how these contributions combine, we need to make an additional assumption:

- A particular change in one feature’s value will cause the same change in score, no matter what the values of the other features are.

Let’s consider this assumption applied to the ToLine feature and consider changing it from 0.0 to 1.0. This assumption says that the change in score due to this change in feature value is always the same, no matter what the other feature values are. This assumption can be encoded in the model by ensuring that the contribution of the ToLine feature to the score is always added on to the contributions from all the other features. Since the same argument holds for each of the other features as well, this assumption means that the score for an email must be the sum of the score contributions from each of the individual features.

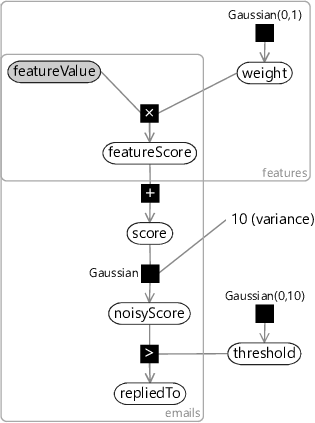

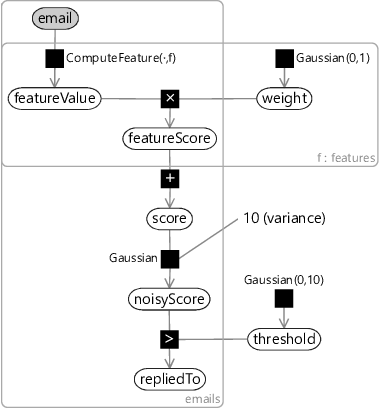

So, in our multi-feature model (Figure 4.5), we have a featureScore array to hold the score contribution for each feature for each email. We can then use a deterministic summation factor to add the contributions together to give the total score. Since we still want Assumption 4.3 to hold for each feature, the featureScore for a feature can be defined, as before, as the product of the featureValue and the feature weight. Notice that we have added a new plate across the features, which contains the weight for the feature, the feature value and the feature score. The value and the score are also in the emails plate, since they vary from email to email, whilst the weight is outside since it is shared among all emails.
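The additive structure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the book's implementation: the feature names other than ToLine, the weights and the feature values are all made up, and the weights are treated as fixed numbers rather than uncertain quantities to be learned.

```python
# Hypothetical weights, one per feature, shared across all emails.
weights = {"ToLine": 1.5, "FeatureB": -0.4, "FeatureC": 0.8}


def score(feature_values, weights):
    """Sum the per-feature contributions featureValue * weight."""
    feature_scores = {
        name: feature_values[name] * weights[name] for name in weights
    }
    return sum(feature_scores.values())


# Two pairs of made-up emails. Within each pair only ToLine changes
# (from 0.0 to 1.0); the other feature values differ between the pairs.
email_a = {"ToLine": 0.0, "FeatureB": 1.0, "FeatureC": 0.5}
email_b = {"ToLine": 1.0, "FeatureB": 1.0, "FeatureC": 0.5}
email_c = {"ToLine": 0.0, "FeatureB": 0.0, "FeatureC": 2.0}
email_d = {"ToLine": 1.0, "FeatureB": 0.0, "FeatureC": 2.0}

change_1 = score(email_b, weights) - score(email_a, weights)
change_2 = score(email_d, weights) - score(email_c, weights)
```

Up to floating-point rounding, both `change_1` and `change_2` equal the ToLine weight, which is the behaviour the additivity assumption asks for: the effect of changing one feature does not depend on the values of the others.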

We now have a model which can combine together an entire set of features. This means we are free to put in as many features as we like, to try to predict as accurately as possible whether a user will reply to an email. More than that, we are assuming that anything we do not put in as a feature is not relevant to the prediction. This is our final assumption:

- Whether the user will reply to an email depends only on the values of the features and not on anything else.

As before, now that we have a complete model, it is a good exercise to go back and review all the assumptions that we have made whilst building the model. The full set of assumptions is shown in Table 4.3.

- The feature values can always be calculated, for any email.

- Each email has an associated continuous score which is higher when there is a higher probability of the user replying to the email.

- If an email’s feature value changes by $x$, then its score will change by $\text{weight} \times x$ for some fixed, continuous weight.

- The weight for a feature is equally likely to be positive or negative.

- A single feature normally has a small effect on the reply probability, sometimes has an intermediate effect and occasionally has a large effect.

- A particular change in one feature’s value will cause the same change in score, no matter what the values of the other features are.

- Whether the user will reply to an email depends only on the values of the features and not on anything else.

Assumption 4.1 arises because we chose to build a conditional model, and so we need to always condition on the feature values.

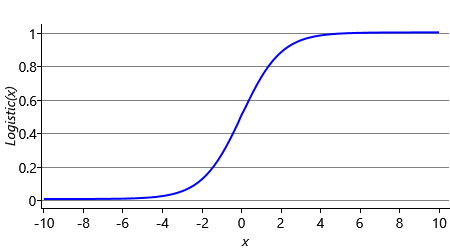

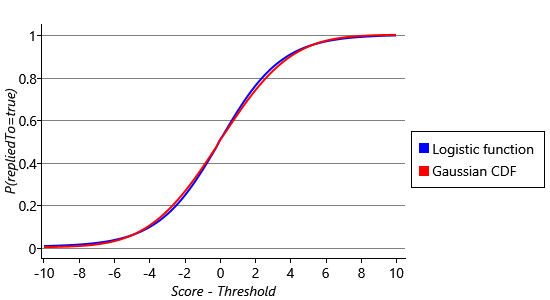

In our model, we have used the red curve of Figure 4.3 to satisfy Assumption 4.2. Viewed as a function that computes the score given the reply probability, this curve is called the probit function. It is named this way because the units of the score have historically been called ‘probability units’ or ‘probits’ [Bliss, 1934]. Since regression is the term for predicting a continuous value (in this case, the score) from some feature values, the model as a whole is known as a probit regression model (or in its fully probabilistic form as the Bayes Point Machine [Herbrich et al., 2001]). There are other functions that we could have used to satisfy Assumption 4.2. The most well-known is the logistic function, which equals $1/(1+e^{-x})$ and has a very similar S-shape (see Figure 4.6 to see just how similar!). If we had used the logistic function instead of the probit function, we would have made a logistic regression model, a very widely used classifier. In practice, both models are extremely similar; we used a probit model because it allowed us to build on the factors and ideas that you learned about in the previous chapter.
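To get a feel for how close the two S-shaped curves are, the following sketch compares the cumulative Gaussian with the logistic function numerically. The rescaling constant 1.702 is an assumption brought in for this illustration (it is a commonly used value for lining up the two curves) and does not come from the text; whether Figure 4.6 applies any rescaling is not stated here.

```python
import math


def cumulative_gaussian(x):
    """Standard Gaussian CDF, mapping a score to a probability."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def logistic(x):
    """Logistic function 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


# Assumption: stretch the logistic input so the two curves line up.
SCALE = 1.702

# Largest vertical gap between the curves on a grid from -5 to 5.
xs = [i / 100.0 for i in range(-500, 501)]
max_gap = max(abs(cumulative_gaussian(x) - logistic(SCALE * x)) for x in xs)
```

On this grid `max_gap` stays small compared with the 0 to 1 range of the curves, which is consistent with the two models behaving very similarly in practice. Without the rescaling the curves still share the same S-shape, but the logistic curve rises more gently.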

Assumption 4.3, taken together with Assumption 4.6, means that the score must be a linear function of the feature values. For example, if we had two features, the score would be $w_1 x_1 + w_2 x_2$, where $x_1$ and $x_2$ are the two feature values and $w_1$ and $w_2$ are their weights. We use the term linear because, if we plot the first feature value against the second, then points with the same score will form a straight line. Any classifier based around a linear function is called a linear classifier.
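The straight-line property can be checked directly. The sketch below uses two made-up weights, picks a target score, and solves for the second feature value at several values of the first; all of the numbers are hypothetical and chosen only for illustration.

```python
# Hypothetical weights for a two-feature model.
w1, w2 = 2.0, -1.0


def score(x1, x2):
    """Linear score for two feature values."""
    return w1 * x1 + w2 * x2


def x2_on_contour(x1, target):
    """Solve w1*x1 + w2*x2 = target for x2 (requires w2 != 0)."""
    return (target - w1 * x1) / w2


target = 0.5
points = [(x1, x2_on_contour(x1, target)) for x1 in (0.0, 0.25, 0.5, 1.0)]
scores = [score(x1, x2) for x1, x2 in points]

# Slope between consecutive points; constant for a straight line.
slopes = [
    (points[i + 1][1] - points[i][1]) / (points[i + 1][0] - points[i][0])
    for i in range(len(points) - 1)
]
```

Every entry of `scores` equals the target, and every entry of `slopes` equals `-w1 / w2`, so the points with the same score do lie on a single straight line.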

Assumption 4.4 and Assumption 4.5 are reasonable statements about how features affect the predicted probability. However, Assumption 4.6 places some subtle but important limitations on what the classifier can learn, which are worth understanding. These are explored and explained in Panel 4.2.

Finally, Assumption 4.7 states that our feature set contains all of the information that is relevant to predicting whether a user will reply to an email. We’ll see how to develop a feature set to satisfy this assumption as closely as possible in the next section, but first we need to understand better the role that the feature set plays.

Features are part of the model

To use our classification model, we will need to choose a set of features to transform the data, so that it better conforms to the model assumptions of Table 4.3. Another way of looking at this is that the assumptions of the combined feature set and classification model must hold in the data. From a model-based machine learning perspective, this means that the feature set combined with the classification model form a larger overall model. In this way of looking at things, the feature set is the part of this overall model that is usually easy to change (by changing the feature calculation code) whereas the classification part is the part that is usually hard to change (for example, if you are using off-the-shelf classifier software there is no easy way to change it).

We can represent this overall combined model in a factor graph by including the feature calculations in the graph, as shown in Figure 4.7. The email variable holds all the data about the email itself (you can think of it as an email object). The feature calculations appear as deterministic ComputeFeature factors inside of the features plate, each of which computes the feature value for the feature, given the email. Notice that, although only email is shown as observed (shaded), featureValue is effectively observed as well since it is deterministically computed from email.
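One way to picture the ComputeFeature factors is as a set of ordinary functions, each taking the email object and returning one feature value. The sketch below is hypothetical throughout: the `Email` class, the `USER` address, the `HasQuestionMark` feature and the exact definition of ToLine (assumed here to be 1.0 when the user is on the To line) are illustrative assumptions, not the book's feature code.

```python
from dataclasses import dataclass, field


@dataclass
class Email:
    """Hypothetical stand-in for the email variable."""
    to: list = field(default_factory=list)
    cc: list = field(default_factory=list)
    body: str = ""


# Hypothetical address of the user whose replies we are predicting.
USER = "user@example.com"


def to_line(email):
    """Assumed definition: 1.0 if the user is on the To line, else 0.0."""
    return 1.0 if USER in email.to else 0.0


def has_question_mark(email):
    """Hypothetical feature: 1.0 if the body contains a question mark."""
    return 1.0 if "?" in email.body else 0.0


# One deterministic feature calculation per entry in the features plate.
COMPUTE_FEATURE = {"ToLine": to_line, "HasQuestionMark": has_question_mark}


def feature_values(email):
    """Deterministically compute every feature value from the email."""
    return {name: fn(email) for name, fn in COMPUTE_FEATURE.items()}


example = Email(to=[USER], body="Are you free for lunch?")
values = feature_values(example)
```

Because `feature_values` is a deterministic function of the email, observing the email fixes every feature value. Changing the feature set amounts to editing the `COMPUTE_FEATURE` table, which leaves the classification part of the model untouched.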

If the feature set really is part of the model, we must use the same approach for designing a feature set as for designing a model. This means that we need to visualise and understand the data, be conscious of assumptions being represented, specify evaluation metrics and success criteria, and repeatedly refine and improve the feature set until the success criteria are met (in other words, we need to follow the machine learning life cycle). This is exactly the process we will follow next.

Review of concepts introduced on this page

regression: The task of predicting a real-valued quantity (for example, a house price or a temperature) given the attributes of a particular data item (such as a house or a city). In regression, the aim is to make predictions about a continuous variable in the model.

logistic function: The function $f(x) = 1/(1+e^{-x})$, which is often used to transform unbounded continuous values into continuous values between 0 and 1. It has an S-shape similar to that of the cumulative Gaussian (see Figure 4.6).

linear function: Any function of one or more variables $f(x_1, \ldots, x_k)$ which can be written in the form $f(x_1, \ldots, x_k) = a + b_1 x_1 + \cdots + b_k x_k$. A linear function of just one variable can therefore be written as $f(x) = a + bx$. Plotting $f(x)$ against $x$ for this equation gives a straight line, which is why the term linear is used to describe this family of functions.

References

[Bliss, 1934] Bliss, C. I. (1934). The Method of Probits. Science, 79(2037):38–39.

[Herbrich et al., 2001] Herbrich, R., Graepel, T., and Campbell, C. (2001). Bayes Point Machines. Journal of Machine Learning Research, 1:245–279.
