Preface

Today, machine learning is being applied to a growing range of problems in a bewildering variety of domains. When doing machine learning, a fundamental challenge is connecting the abstract mathematics of a particular machine learning technique to a concrete, real-world problem. This book tackles this challenge through model-based machine learning. Model-based machine learning is an approach that focuses on understanding the assumptions encoded in a machine learning system, and their corresponding impact on the behaviour of the system. The practice of model-based machine learning involves separating the assumptions being made about a real-world situation from the detailed mathematics of the algorithms needed to do the machine learning. This approach makes it easier both to understand the behaviour of a machine learning system and to communicate this to others. Much more detail on what model-based machine learning is and how it can help is given in the introduction chapter entitled “How can machine learning solve my problem?”

This book is unusual for a machine learning textbook in that we do not review categories of algorithms or techniques. Instead, we introduce all of the key ideas through case studies involving real-world applications. Case studies play a central role because it is only in the context of applications that it makes sense to discuss modelling assumptions. Each case study chapter introduces a real-world application and solves it using a model-based approach. In addition, an initial tutorial chapter explores a fictional problem involving a murder mystery.

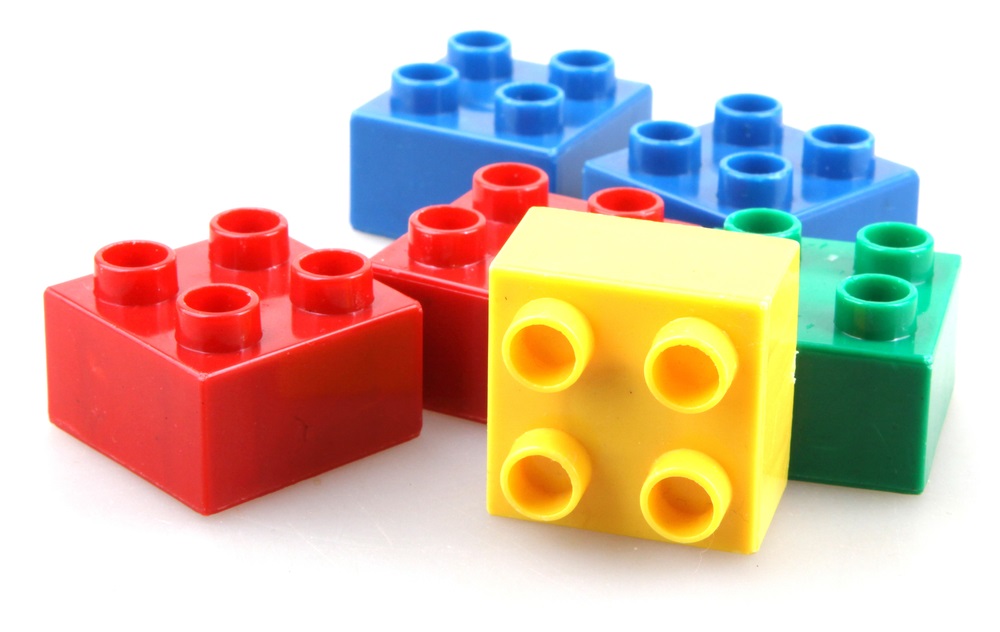

Only a few building blocks are needed to construct an infinite variety of models.

Each chapter also serves to introduce a variety of machine learning concepts, not as abstract ideas, but as concrete techniques motivated by the needs of the application. You can think of these concepts as the building blocks for constructing models. Although you will need to invest some time to understand these concepts fully, you will soon discover that a huge variety of models can be constructed from a relatively small number of building blocks. By working through the case studies in this book, you will learn what these components are and how to use them. The aim is to give you sufficient appreciation of the power and flexibility of the model-based approach to allow you to solve your own machine learning problem.

Who this book is for

This book is intended for any technical person who wants to use machine learning to solve a real-world problem, or who wants to understand why an existing machine learning system behaves the way it does. Most of the book focuses on designing models to solve problems arising in real case studies. The final chapter, “How to read a model”, instead looks at using model-based machine learning to understand existing machine learning techniques.

More mathematically minded readers will want to understand the details of how models are turned into runnable algorithms. We have separated these parts of the book, which require more advanced mathematics, into deep-dive sections, which are marked with panels like the one below. These sections are optional – you can read the book without them.

Technical sections which dive into the details of algorithms will be marked like this. If you just want to focus on modelling, you can skip these sections.

How to read this book

Each case study in this book describes a journey from problem statement to solution. You probably do not want to follow this journey in a single sitting, so each case study is split into sections – we recommend reading one section at a time and pausing at the end of each to digest what you have learned. To support this, the machine learning concepts introduced in a section are highlighted like this and are reviewed in a short glossary at the end of the section. We aim to provide enough detail on each concept to allow the case studies to be understood, along with links to external sources, such as Bishop [2006], where you can find more detail if you are interested in a particular topic.

Each introductory section of the book also includes a self-assessment, consisting of hands-on, practical exercises. You can use these exercises to test your understanding of the concepts introduced in the corresponding section. Rather than being purely mathematical, these exercises are generally open-ended, with the aim of getting you into the right mindset for thinking about assumptions and developing machine learning models. Most exercises are designed to allow self-checking, for example by comparing the results of one exercise with another. Some exercises are best worked through with a partner, so that you can compare notes on your answers and discuss any assumptions you have made.

Online book and code

This book has an online version at mbmlbook.com, which complements the paper version with additional material and functionality. For example, the online format provides interactive model diagrams and pop-up definitions of terms, and allows the data behind any plot to be downloaded. The online version also includes contact details for providing feedback and reporting errata, and will always be up to date with corrections.

In addition, all the results in the book can be reproduced using the accompanying source code. This code is open source and is freely available at github.com/dotnet/mbmlbook.

Acknowledgements

First and foremost, I would like to thank the major contributors without whom this book would not have happened. Christopher Bishop helped develop the initial concept and structure for this book and also contributed several chapters. Each case study chapter was a significant machine learning project in itself, requiring the development of a model to solve a genuine real-world problem. Tom Diethe, John Guiver and Yordan Zaykov took on these projects: gathering data, writing code, running experiments, solving problems and producing all the results that you see in each chapter. They also provided detailed and thoughtful discussion and feedback on the chapters themselves.

The staff at CRC Press have been hugely supportive and helpful in the final stages of preparing this book for publication. I’d particularly like to thank my editors, first John Kimmel and later Lara Spieker, for their unwavering support in guiding this book home.

I am grateful to Microsoft for giving me the freedom to work on this book, as well as providing the stimulating research environment which has allowed me to develop my machine learning skills and understanding for the last twenty years. My Microsoft colleagues have also been invaluable in providing detailed feedback on many aspects of this book. In Cambridge, Tom Minka, Sebastian Blohm, John Bronskill, Andy Gordon, Sam Webster, Alex Spengler, Andrew Fitzgibbon, Elena Pochernina, Matteo Venanzi, Boris Yangel, Jian Li and Jonathan Tims have all provided valuable discussion and feedback on early drafts. I want particularly to thank Pashmina Cameron for her very detailed and thoughtful feedback which really helped improve the quality and clarity of many chapters. More widely across Microsoft, Jim Edelen, Alex Wetmore, Tyler Gill, Max Bovykin, Fedor Zhdanov, Li Deng, Michael Shelton, Emmanuel Gillain and Bahar Bina have provided very useful commentary especially on the early chapters and on the exercises.

Outside of Microsoft, I am indebted to Angela Simpson and Adnan Custovic for their collaboration on the project which led to the Chapter 6 case study on childhood asthma, and also for their comments on that chapter. Other collaborators in the healthcare AI space, Damian Sutcliffe and Andres Floto, have also given much valuable feedback on this chapter and others. Thanks also to Dr Sarah Supp for kindly contributing her photo of baked goods to inspire novel data visualisations in Chapter 2.

The online version of this book was launched early on in the writing process and has been invaluable in gathering feedback throughout. I owe a debt of gratitude to Nick Duffield for the graphic design of the online version and to Andy Slowey, Nathan Jones and Ian Kelly for keeping it up and running over the years. I am also hugely grateful to Dmitry Kats and Alexander Novikov for their hard work in open sourcing the accompanying code, and for making substantial improvements to it in the process.

Very many online readers have corrected typos and provided feedback, questions and positive comments, all of which have been immensely helpful and encouraging. For taking the time to pause reading and write back, I’d like to thank: Yousry Abdallah, Marius Ackerman, Tauheedul Ali, Ali Arslan, Luca Baldassarre, Chethan Bhateja, Marcus Blankenship, Glen Bojsza, Subhash Bylaiah, Aurelien Chauvey, Joh Dokler, Vladislavs Dovgalecs, Peter Dulimov, Daniel Emaasit, Gordon Erlebacher, Hon Fai Choi, Tavares Ford, Eric Fung, Shreyas Gite, Chiraag Gohel, Craig Gua, Guy Hall, Jonathan Holden, Veeresh Inginshetty, Mohammed Jalil, Lin Jia, Brett Jones, Oleg Karandin, Joakim Grahl Knudsen, Veysel Kocaman, Michael Landis, John Lataire, Josh Lawrence, Dustin Lee, Vincent Lefoulon, Ben Lefroy, Mark Legters, Martina Lutterová Štúrova, Andrew MacGinitie, Tegan Maharaj, John Marino, Kyli McKay-Bishop, Arthur Mota Gonçalves, Moritz Münst, Takuya Nakazato, Hiske Overweg, Francisco Pereira, Benjamin Poulain, Venkat Ramakrishnan, Martin Roa Villescas, Marwan Sabih, Hammad Saleem, Lucian Sasu, Yurii Shevchuk, Sphiwe Skhosana, Vivek Srinivasan, David Steinar Asgrimsson, Gijs Stoeldraaijers, Agnieszka Szefer, Yousuke Takada, Matthew E. Taylor, Martin Thøgersen, Udit Tidu, Levente Torok, Benjamin Tran Dinh, Tavi Truman, Edderic Ugaddan, Ron Williams, Ted Willke, Marat Zaynutdinov and Mark Zhukovsky.

Finally, my deepest gratitude to my wife Ellen for her unflagging support and inspiration during the many years it has taken to write this book.

[Bishop, 2006] Bishop, C. M. (2006). Pattern Recognition and Machine Learning. Springer.