2.3 Loopiness

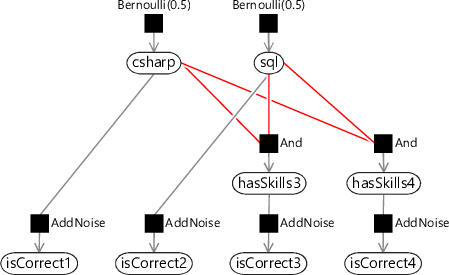

Let’s now extend our model slightly by adding a fourth question which needs both skills. This new factor graph is shown in Figure 2.14, where we have added new isCorrect4 and hasSkills4 variables for the new question. Surely we can also do inference in this, very slightly larger, graph using belief propagation? In fact, we cannot.

Loops can be challenging.

The problem is that belief propagation can only send a message out of an (unobserved) node after we have received messages on all other edges of that node (Algorithm 2.1). Given this constraint, we can only send all the messages in a graph if there are no loops, where a loop is a path through the graph which starts and finishes at the same node (without using the same edge twice). If the graph has a loop, then we cannot send any of the messages along the edges of the loop because that will always require one of the other messages in the loop to have been computed first.
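The scheduling constraint can be checked mechanically. The following is a minimal Python sketch, not code from the book: it keeps sending any message whose prerequisites are met and then reports the messages that can never be sent. The node names (`prior_csharp`, `noise1`, `and3` and so on) and the edge lists are an assumed reading of the three- and four-question factor graphs, and the observed-variable exception is ignored because observed nodes are leaves here and can always send.

```python
def three_question_edges():
    """Edges of an assumed three-question factor graph (names are illustrative)."""
    return [
        ("prior_csharp", "csharp"), ("prior_sql", "sql"),
        ("csharp", "noise1"), ("noise1", "isCorrect1"),
        ("sql", "noise2"), ("noise2", "isCorrect2"),
        ("csharp", "and3"), ("sql", "and3"), ("and3", "hasSkills3"),
        ("hasSkills3", "noise3"), ("noise3", "isCorrect3"),
    ]


def fourth_question_edges():
    """Extra edges for a fourth question that needs both skills (assumed layout)."""
    return [
        ("csharp", "and4"), ("sql", "and4"), ("and4", "hasSkills4"),
        ("hasSkills4", "noise4"), ("noise4", "isCorrect4"),
    ]


def schedule(edges):
    """Send every message that the rule allows; return (sent order, blocked)."""
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    sent, order = set(), []
    progress = True
    while progress:
        progress = False
        for node in sorted(neighbours):
            for target in sorted(neighbours[node]):
                if (node, target) in sent:
                    continue
                # A message to `target` needs messages from all other neighbours.
                others = neighbours[node] - {target}
                if all((other, node) in sent for other in others):
                    sent.add((node, target))
                    order.append((node, target))
                    progress = True

    blocked = sorted((a, b) for a in neighbours for b in neighbours[a]
                     if (a, b) not in sent)
    return order, blocked


tree_order, tree_blocked = schedule(three_question_edges())
loopy_order, loopy_blocked = schedule(
    three_question_edges() + fourth_question_edges())

print("tree: sent", len(tree_order), "blocked", len(tree_blocked))
print("loopy: sent", len(loopy_order), "blocked", len(loopy_blocked))
```

In this sketch every message in the three-question graph is eventually sent, whereas in the four-question graph the messages around the loop, and every message that depends on them, remain blocked.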

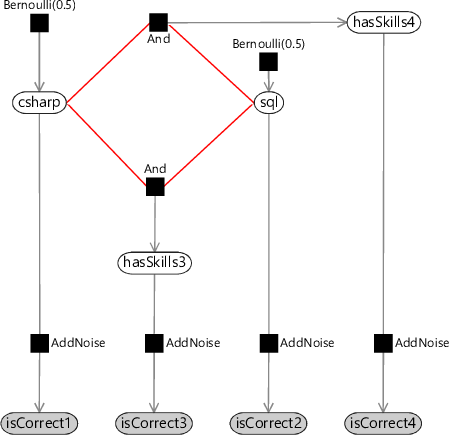

If you look back at the old three-question factor graph (Figure 2.5) you’ll see that it has no loops (a graph with no loops is called a tree) and so belief propagation worked without problems. However, our new graph does have a loop, which is marked in red in Figure 2.14. To do inference in such a loopy graph, we need to look beyond standard belief propagation.
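One simple way to check whether a graph is a tree is to prune leaf nodes repeatedly: if nothing is left, the graph has no loops, and otherwise the remaining nodes lie on or between loops. The sketch below illustrates this under the assumption that the two factor graphs have the edges listed (the node names are hypothetical labels, not taken from the figures).

```python
def nodes_on_loops(edges):
    """Repeatedly remove nodes with at most one edge; return what is left."""
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    leaves = [n for n in neighbours if len(neighbours[n]) <= 1]
    while leaves:
        leaf = leaves.pop()
        for other in neighbours.pop(leaf):
            neighbours[other].discard(leaf)
            if len(neighbours[other]) == 1:
                leaves.append(other)  # `other` has just become a leaf
    return set(neighbours)


# Assumed edge lists for the three- and four-question graphs.
three_question = [
    ("prior_csharp", "csharp"), ("prior_sql", "sql"),
    ("csharp", "noise1"), ("noise1", "isCorrect1"),
    ("sql", "noise2"), ("noise2", "isCorrect2"),
    ("csharp", "and3"), ("sql", "and3"), ("and3", "hasSkills3"),
    ("hasSkills3", "noise3"), ("noise3", "isCorrect3"),
]
four_question = three_question + [
    ("csharp", "and4"), ("sql", "and4"), ("and4", "hasSkills4"),
    ("hasSkills4", "noise4"), ("noise4", "isCorrect4"),
]

print(nodes_on_loops(three_question))  # empty set: the graph is a tree
print(sorted(nodes_on_loops(four_question)))
```

For a graph with a single loop, as assumed here, the surviving nodes are exactly the nodes on that loop: the two skill variables and the two factors that combine them.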

To perform exact inference in loopy graphs, we need to get rid of the loops somehow. In this toy example, we could notice that hasSkills3 and hasSkills4 are the same and remove one of them, but such a simple solution is unlikely to be available in real problems. Instead, there are various general-purpose methods for removing loops, as described in Panel 2.2. Unfortunately, all these methods typically become too slow when dealing with large factor graphs. In most real applications the graphs are very large but inference needs to be performed quickly, so such exact inference methods are usually too slow to be useful.

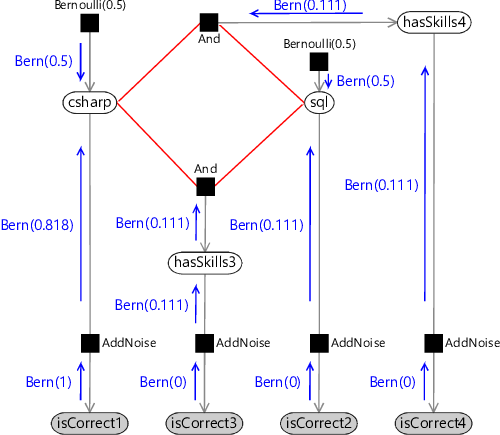

The alternative is to look at methods that compute an approximation to the required marginal distributions, but which can do so in much less time. In this book, we will focus on such approximate inference approaches, since they have proven to be remarkably useful in a wide range of applications. For this particular loopy graph, we will introduce an approximate inference algorithm called loopy belief propagation.

Loopy belief propagation

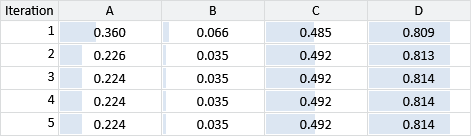

Loopy belief propagation gives the marginal distributions for csharp and sql as Bernoulli(0.809) and Bernoulli(0.010) respectively. If we use an exact inference method to compute the true posterior marginals, we get Bernoulli(0.800) and Bernoulli(0.024), showing that our approximate answers are reasonably close to the exact solution. For the purposes of this application, we are interested in whether a candidate has a skill or not, and we can tolerate the predicted probability being off by a percentage point or two if that lets the system run quickly. This illustrates why approximate inference methods can be so useful when tackling large-scale inference problems. However, it is always worth investigating what inaccuracies are being introduced by using an approximate inference method. Later on, in section 2.5, we’ll look at one possible way of doing this.
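The comparison can be reproduced with a short Python sketch. This is an illustration under stated assumptions rather than the book's implementation: it assumes a Bernoulli(0.5) prior on each skill, a 0.9 probability of answering correctly with the required skills and 0.2 without them, and that question 1 (csharp) was answered correctly while questions 2 (sql), 3 and 4 (both skills) were answered incorrectly. None of these values is stated in this section; they are used here because they reproduce the figures quoted above. The sketch also collapses each question's factors and its observed answer into a single message to each skill, and updates all messages in parallel until they stop changing.

```python
from itertools import product

# Assumed model parameters (not stated in this section).
PRIOR = 0.5                   # prior probability of having each skill
P_CORRECT_IF_SKILLED = 0.9    # P(isCorrect | has all needed skills)
P_CORRECT_IF_GUESSING = 0.2   # P(isCorrect | lacks a needed skill)
SKILLS = ("csharp", "sql")
# Each entry: (skills needed, observed isCorrect). Observations are assumed.
QUESTIONS = [(("csharp",), True), (("sql",), False),
             (("csharp", "sql"), False), (("csharp", "sql"), False)]


def likelihood(has_skills, is_correct):
    p = P_CORRECT_IF_SKILLED if has_skills else P_CORRECT_IF_GUESSING
    return p if is_correct else 1.0 - p


def exact_marginals():
    """Exact posterior P(skill = true) by enumerating every skill assignment."""
    totals = dict.fromkeys(SKILLS, 0.0)
    evidence = 0.0
    for values in product([True, False], repeat=len(SKILLS)):
        state = dict(zip(SKILLS, values))
        weight = 1.0
        for skill in SKILLS:
            weight *= PRIOR if state[skill] else 1.0 - PRIOR
        for needed, correct in QUESTIONS:
            weight *= likelihood(all(state[s] for s in needed), correct)
        evidence += weight
        for skill in SKILLS:
            if state[skill]:
                totals[skill] += weight
    return {skill: totals[skill] / evidence for skill in SKILLS}


def belief(skill, msg, exclude=None):
    """Prior times incoming messages to `skill`, skipping question `exclude`."""
    t, f = PRIOR, 1.0 - PRIOR
    for (q, s), m in msg.items():
        if s == skill and q != exclude:
            t, f = t * m, f * (1.0 - m)
    return t / (t + f)


def loopy_bp(tolerance=1e-9, max_iterations=100):
    # msg[(q, s)]: message from question q to skill s, stored as P(true).
    # All messages start uniform so that the loop can get going.
    msg = {(q, s): 0.5 for q, (needed, _) in enumerate(QUESTIONS) for s in needed}
    for _ in range(max_iterations):
        new = {}
        for (q, s) in msg:
            needed, correct = QUESTIONS[q]
            # Probability that the other needed skills are all present,
            # according to the messages those skills send to this question.
            p_others = 1.0
            for other in needed:
                if other != s:
                    p_others *= belief(other, msg, exclude=q)
            t = (p_others * likelihood(True, correct)
                 + (1.0 - p_others) * likelihood(False, correct))
            f = likelihood(False, correct)
            new[(q, s)] = t / (t + f)
        change = max(abs(new[k] - msg[k]) for k in msg)
        msg = new
        if change < tolerance:  # converged: messages no longer change
            break
    return {skill: belief(skill, msg) for skill in SKILLS}


exact = exact_marginals()
approx = loopy_bp()
for skill in SKILLS:
    print(f"{skill}: loopy BP {approx[skill]:.3f}, exact {exact[skill]:.3f}")
```

Under these assumptions the printed probabilities round to the values quoted above. Enumeration is only feasible here because there are two skills; its cost doubles with every skill added, which is the practical reason for preferring an approximate method on larger models.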

Another reason for using approximate inference methods is that they let us do inference in much more complex models than is possible using exact inference. The accuracy gain achieved by using a better model, that more precisely represents the data, usually far exceeds the accuracy loss caused by doing approximate inference. Or as the mathematician John Tukey put it,

“Far better an approximate answer to the right question… than an exact answer to the wrong one.”

Review of concepts introduced on this page

loop: A loop is a path through a graph starting and ending at the same node which does not go over any edge more than once. For example, see the loop highlighted in red in Figure 2.15a.

loopy graph: A graph which contains at least one loop. For example, the graph of Figure 2.14 contains a loop, which may be seen more clearly when it is laid out as shown in Figure 2.15a. Loopy graphs present greater difficulties when performing inference calculations – for example, belief propagation no longer gives exact marginal distributions.

exact inference: An inference calculation which exactly computes the desired posterior marginal distribution or distributions. Exact inference is usually only possible for relatively small models or for models which have a particular structure, such as a tree. See also Panel 2.2.

approximate inference: An inference calculation which aims to closely approximate the desired posterior marginal distribution, used when exact inference will take too long or is not possible. For most useful models, exact inference is not possible or would be very slow, so some kind of approximate inference method will be needed.

converged: The state of an iterative algorithm when further iterations do not lead to any change. When an iterative algorithm has converged, there is no point in performing further iterations and so the algorithm can be stopped. Some convergence criteria must be used to determine whether the algorithm has converged – these usually allow for small changes (for example, in messages) to account for numerical inaccuracies or to stop the algorithm when it has approximately converged, to save on computation.

message-passing schedule: The order in which messages are calculated and passed in a message-passing algorithm. The result of the message-passing algorithm can change dramatically depending on the order in which messages are passed and so it is important to use an appropriate schedule. Often, a schedule will be iterative – in other words, it will consist of an ordering of messages to be computed repeatedly until the algorithm converges.

Self assessment 2.3

The following exercises will help embed the concepts you have learned in this section. It may help to refer back to the text or to the concept summary above.

- Draw a factor graph for a six-question test which assesses three skills. Identify all the loops in your network. If there are no loops, add more questions until there are.

- For your six-question test, design a message-passing schedule which uses as few initial messages as possible (one per loop). Remember that a message cannot be sent from a node unless messages have been received on all edges connected to that node (except for observed variable nodes).

- Extend your three-question Infer.NET model from the previous self assessment to include the fourth question of Figure 2.14. Use the TraceMessages attribute to see what messages Infer.NET is sending and confirm that they match the schedule and values shown in Table 2.5. If you get stuck, you can refer to the source code for this chapter [Diethe et al., 2019].

References

[Lauritzen and Spiegelhalter, 1988] Lauritzen, S. L. and Spiegelhalter, D. J. (1988). Local Computations with Probabilities on Graphical Structures and Their Application to Expert Systems. Journal of the Royal Statistical Society, Series B, 50(2):157–224.

[Pearl, 1988] Pearl, J. (1988). Probabilistic Reasoning in Intelligent Systems. Morgan Kaufmann, San Francisco.

[Suermondt and Cooper, 1990] Suermondt, H. and Cooper, G. F. (1990). Probabilistic inference in multiply connected belief networks using loop cutsets. International Journal of Approximate Reasoning, 4(4):283–306.

[Frey and MacKay, 1998] Frey, B. and MacKay, D. (1998). A revolution: Belief propagation in graphs with cycles. In Neural Information Processing Systems, pages 479–485. MIT Press.

[Minka et al., 2014] Minka, T., Winn, J., Guiver, J., Webster, S., Zaykov, Y., Yangel, B., Spengler, A., and Bronskill, J. (2014). Infer.NET 2.6. Microsoft Research Cambridge. http://research.microsoft.com/infernet.

[Diethe et al., 2019] Diethe, T., Guiver, J., Zaykov, Y., Kats, D., Novikov, A., and Winn, J. (2019). Model-Based Machine Learning book, accompanying source code. https://github.com/dotnet/mbmlbook.